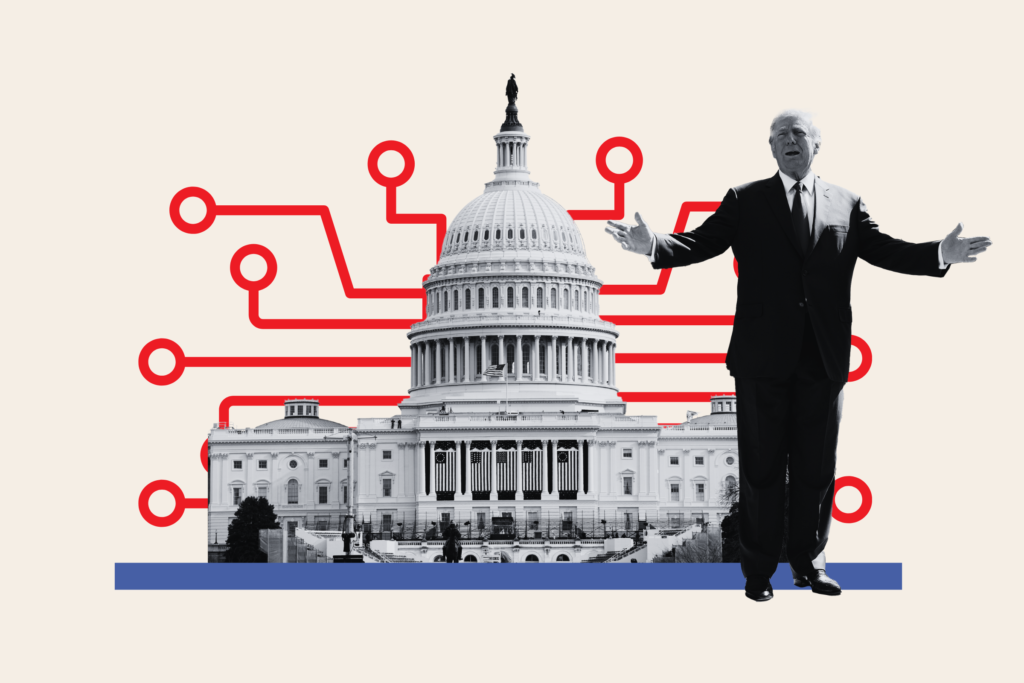

Donald Trump’s return to office has raised questions over potential changes to artificial intelligence policy in the United States.

The president-elect has promised to dismantle incumbent President Joe Biden’s landmark AI executive order and to establish a Department of Government Efficiency, nicknamed DOGE, led by Elon Musk.

Experts suggest that state-level regulations and the drive for AI innovation will likely continue regardless of federal oversight changes, though concerns remain about balancing technological advancement with safety and ethical standards.

Newsweek contacted Trump via email for comment.

Here, experts share their thoughts on how the AI industry might be affected by Trump’s second term.

Where Does AI Policy Currently Stand?

The U.S. government’s current AI policy attempts to balance innovation with safety, security and ethical standards through several key initiatives, including the U.S. Artificial Intelligence Safety Institute Consortium (AISIC), established in February 2024, and the executive order on AI that Biden signed in October 2023.

Titled “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence,” the order outlined measures to govern AI development and deployment. It directed federal agencies to establish standards for AI safety and security, protect privacy and promote equity. It also required major federal agencies to create a chief artificial intelligence officer position to oversee AI-related activities.

Before the order, the White House Office of Science and Technology Policy (OSTP) released the “Blueprint for an AI Bill of Rights” in October 2022. It proposed five principles to protect the public from harmful or discriminatory automated systems.

These principles include ensuring safe and effective systems, protecting against algorithmic discrimination, safeguarding data privacy, providing notice and explanation, and offering human alternatives and fallback options. Much of this was incorporated into the executive order, which Trump has promised to repeal when he returns to office.

“In some sense, the status quo for AI regulation is that there is none. Essentially, the goal of the [executive] order and the AI Safety Institute was to cultivate a conversation at the federal level around what does safe AI mean? What does trustworthy AI mean?” Dr. Jason Corso, professor of robotics and electrical engineering and computer science at the University of Michigan, told Newsweek.

“I think there was a growing appreciation that in order to build widespread adoption in AI, one really needs more trust in AI and these regulations or these initiatives would have helped cultivate that conversation,” he added.

How Could State Laws Around AI Be Affected?

Dismantling federal regulations, however, doesn’t change state laws. California, for example, has the AI Transparency Act, signed into law by Governor Gavin Newsom in September 2024.

This act mandates that providers of generative AI systems make available an AI detection tool, offer users the option to include a manifest disclosure indicating content is AI-generated, include a latent disclosure in AI-generated content and enter into contracts with licensees to maintain the AI system’s capability to include such disclosures.

“A Trump administration might ease federal AI regulations to boost innovation and reduce what they might perceive as regulatory burdens on businesses, however, this wouldn’t impact state laws like those that have recently passed in California,” Sean Ren, associate professor of computer science at the University of Southern California (USC) and CEO of Sahara AI, told Newsweek.

“This could create a patchwork of rules, making it more complex for businesses operating nationally to stay compliant. While companies may see less federal red tape, they’ll still face varying state regulations,” he added.

With the lack of federal rules specifically targeted at frontier AI companies, Markus Anderljung, director of policy and research at the Centre for the Governance of AI and adjunct fellow at the Center for a New American Security (CNAS), told Newsweek the biggest difference “will be intensified efforts to make it easier to build new data centers and the power generation, including nuclear reactors, needed to run them.”

Beyond the potential dismantling of the executive order, “I expect the biggest difference will be on social issues, stripping out anything that’s seen as woke. With other things, I think you can expect more continuity,” Anderljung said of possible changes when Trump returns.

“There’s bipartisan consensus on the importance of supporting the U.S. artificial intelligence industry, of competing with China, of building out U.S. data center capacity,” he added.

This focus on competing with China was an element of the previous Trump administration, which “started imposing stricter AI-related export controls on China, beginning with the controls on [telecoms firm] Huawei, followed by export controls on semiconductor manufacturing tools,” said Anderljung.

“This suggests a new Trump administration might keep or strengthen controls imposed since then by the Biden administration, especially in light of recent reports of Huawei chips being produced by TSMC [Taiwan Semiconductor Manufacturing Company],” he added.

Where Does Musk Fit Into Trump’s Plans?

With the announced creation of DOGE, to be headed by Musk, AI regulation, or deregulation, could come under the Tesla boss’s remit.

“Elon Musk’s role as CEO of AI-driven companies like Tesla and Neuralink presents inherent conflicts of interest, as policies he helps shape could directly impact his businesses,” said Ren.

“This complicates any direct advisory role he might take on. That said, Musk has long advocated for responsible AI regulation and could bring valuable insights to AI policy without compromising the public interest.”

However, given the complexity of AI policy, Ren believes that “effective guidance requires more than just one mind,” adding that “it’s essential to have experts from multiple fields to address the diverse ethical, technological and social issues involved.”

Ren suggested that to better address AI issues, Trump could establish a multi-adviser panel that includes experts from academia, technology and ethics, balancing Musk’s influence and ensuring a well-rounded perspective.

Continued AI Innovation

Ultimately, pointing to the relative lack of federal AI regulation, particularly in comparison with the EU, Corso thinks that a second Trump term won’t necessarily change the level of AI innovation, and that cultivating an environment of safe and trustworthy AI relies more on individual players in the space.

“There’s already a huge amount of innovation in AI. I don’t think regulation was really going to play an impact in that. There will continue to be a huge amount of innovation in AI,” he said.

“I think what we need to see is more companies, more leaders stepping up to share the work openly. My view is that an open ecosystem for AI means safer, faster innovation.”

“There is intense value in cultivating an open ecosystem, in which people will share their thoughts, their understandings, their models, their data and so on.”
